Nitter thread from Julio Merino on application responsiveness in early-2000s Windows computers versus modern Windows computers. Videos available in linked thread.

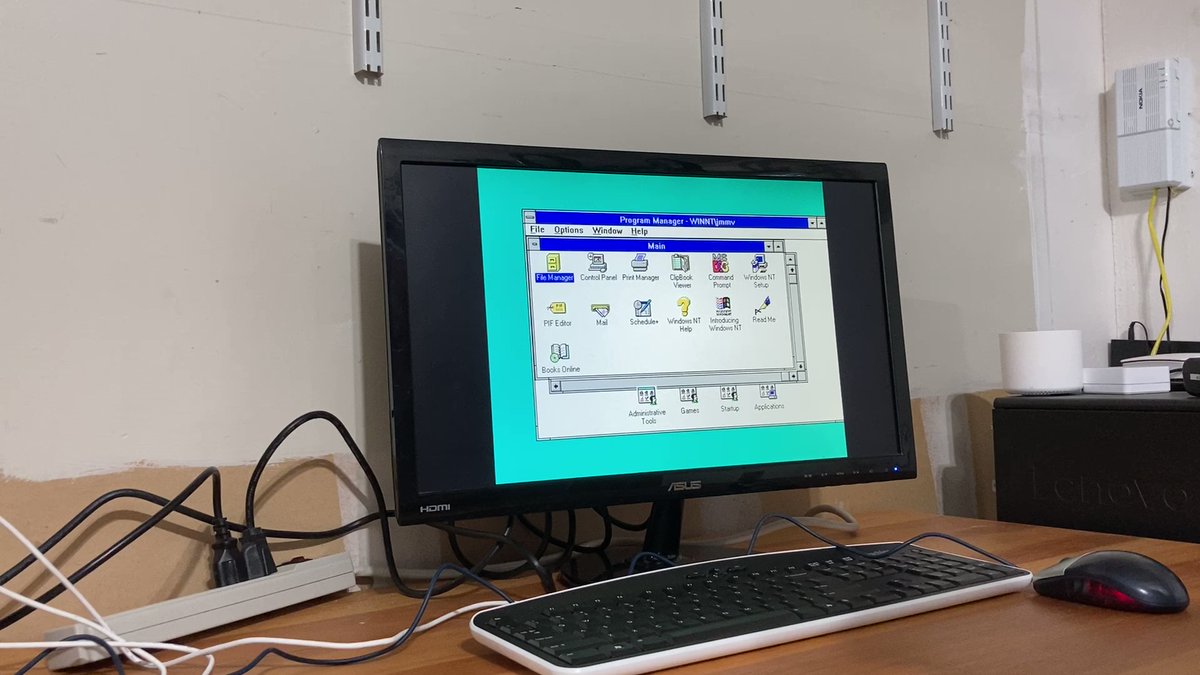

Please remind me how we are moving forward. In this video, a machine from the year ~2000 (600MHz, 128MB RAM, spinning-rust hard disk) running Windows NT 3.51. Note how incredibly snappy opening apps is.

Now look at opening the same apps on Windows 11 on a Surface Go 2 (quad-core i5 processor at 2.4GHz, 8GB RAM, SSD). Everything is super sluggish.

For those thinking that the comparison was unfair, here is Windows 2000 on the same 600MHz machine. Both are from the same year, 1999. Note how the immediacy is still exactly the same and hadn’t been ruined yet.

Maybe the difference in security is the culprit: my home PC is snappy, while my work PC is not at all (even though it is the better machine).

That’s maybe why my old Linux box running an old 4th-gen Intel is insta-quick: no slow heuristic “AI” scanning-uploading-waiting for an answer every time I do the smallest thing…

I suspect it’s networking. Every damn app links to something on a network and network calls are slooooow. Try opening apps on a machine that has a network connection but no internet access and it’s a really bad experience. It’s like they have to phone home before they finish loading, and they’ll sit there stopping everything until they time out.

Those aren’t really the same apps between the two systems, even if most of them still have the same names. Not only do the modern versions have more features, but they also have updated designs, window chrome, and use more secure underpinnings.

Compare the boot times, network speeds, ease and speed of installing new software, and security of the same old vs. new systems, and the new stuff will come out on top every time.

I think it might be that Windows has just kept building on top of the same stuff over and over without fixing or taking out inessential things. Like how they can’t take Internet Explorer out of Windows because it is critical to the OS.

> Like how they can’t take Internet Explorer out of Windows because it is critical to the OS.

I thought that was no longer true and they did take it out?

All they did was make it so that if you try to open IE on Windows, it redirects to Edge. They can’t get rid of it because lots of core programs still use it. Also, lots of .NET applications still need it, like the HP printer management software HP webjet.

Yep. The EU lawsuit forced them to. Also internet exploder is no longer a thing these days 🙂

I don’t know how some developers manage it. I’ve written web apps in React and, without even using available optimisations, the UI is acceptably snappy on any modern desktop.

We inherited an application from another vendor (because of general issues with the project) and it’s just S L O W. The build is slow and takes several minutes, the animations are painful and even the translations are clearly not available for the first 5 seconds.

My question is, how? I’m not an expert, I generally suck at frontend and I just had to fill in for it. I didn’t purposely write optimised code, the applications are similar in the amount of functionality they provide and they both heavily use JavaScript. How do you make it that slow?

Overuse of the spread operator and creating functions inside of functions are two of the biggest culprits of performance issues in modern JS.

Yeah, the spread operator is heavy. Admittedly, one iteration of our software abused it and still seemed to run OK. We did end up changing that, but not for performance reasons; it was more about code complexity. I wonder how excessive you have to get?

I recently set up a Windows 2000 computer with a 7W Via Eden CPU that scores 82 on Passmark, compared to about 46,000 for my main computer’s 5950x. The Windows 2000 PC has an old IDE HDD and 512MB RAM. Although this computer couldn’t run any remotely recent OS (even Antix Linux brought it to its knees), it flies along with Windows 2000 and feels just as fast for everyday things as new PCs do.

The problem is that we’re being given vast amounts of CPU cycles and RAM, but our operating systems are taking them away again before the user gets a say. Windows 11 does so much in the background that it no longer feels like I am in control of the computer. I get to use it when it doesn’t have anything more important that Microsoft wants it to do. Linux is still better, but there’s a whole lot more going on in the background of a typical system than there used to be.

It’s not just the operating systems, it’s also the way software is developed now. Those old Windows applications were probably written in C++, which is only lightly abstracted over C, which is about as close as you’re going to get to machine code without going into assembly.

These days, you might have several layers of abstraction before you get to the assembly level. And those abstractions are probably also abstracted by third-party libraries, which might be chained to even more libraries, causing even more code to need to load and run. Then all of that might not ultimately even be machine code: it might be in a language like C# or Java, where the program is in an intermediate language that needs to be JIT-compiled by a runtime, which also needs to be loaded and run, before it can be executed. Then that application might provide another layer of abstraction and run something in a browser-like instance, à la anything Electron-based.

deleted by creator

Are or were you able to compare it to SublimeText and UltraEdit by chance?

deleted by creator

Good to know, because UltraEdit has been my go-to editor for large files so far, especially large “single line” files (length-delimited data files, for example). So I probably don’t need to look for an alternative then.

Btw, Sublime is cross-platform. Even the license is cross-platform: buy once and use it on every Windows, Linux and Mac machine you use. I find it much, much snappier than VS Code. For large files it can’t beat UE (and therefore EmEditor), but for most editing tasks the UX beats the rest, IMO.

deleted by creator

Have you tried Geany, by any chance? I recall it being pretty quick back in the days of single-core machines being the norm, but I’m curious how it stacks up now in terms of snappiness.

In dealing with large files or in general?

Edits: I overgeneralized ignorantly and stand corrected.

That’s a good point.

Many abstractions are not performance-neutral, but have some scenarios where they perform fast and others where they are slow. We’re witnessing the accumulation of hundreds of abstractions that may be poorly optimized or used for purposes outside of their optimal performance zones.

> No abstraction is performance-neutral

That’s not true. Zero-cost abstractions are a key feature of C++ and Rust. For example, Rust’s `Option<&T>` compiles down to nothing more than a potentially-null pointer.
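The point about `Option` can be checked directly. Below is a minimal sketch, assuming the comment is about an `Option` wrapped around a reference; the helper names `reference_sizes` and `first_even` are made up for illustration and are not from the thread. It compares the size of a plain reference with the size of the same reference wrapped in `Option`, and for contrast prints the sizes for a plain integer, which has no spare bit pattern to reuse for `None`.

```rust
use std::mem::size_of;

/// Hypothetical helper: returns (size of `&u64`, size of `Option<&u64>`).
fn reference_sizes() -> (usize, usize) {
    (size_of::<&u64>(), size_of::<Option<&u64>>())
}

/// Hypothetical helper: returns a reference to the first even value, if any.
/// Because references are never null, `None` can reuse the null bit pattern.
fn first_even(values: &[u64]) -> Option<&u64> {
    values.iter().find(|v| **v % 2 == 0)
}

fn main() {
    let (plain, wrapped) = reference_sizes();
    println!("&u64: {plain} bytes, Option<&u64>: {wrapped} bytes");

    // Contrast: every bit pattern of a u64 is a valid value, so Option<u64>
    // needs extra space to record whether a value is present.
    println!(
        "u64: {} bytes, Option<u64>: {} bytes",
        size_of::<u64>(),
        size_of::<Option<u64>>()
    );

    let data: [u64; 4] = [3, 5, 8, 9];
    println!("first even: {:?}", first_even(&data));
}
```

The equal sizes in the first line of output are what "compiles down to a potentially-null pointer" means in practice. The second line shows that the abstraction is only free when the wrapped type has an unused bit pattern available, which fits the narrower claim that many abstractions, rather than all of them, carry a cost.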

When feature development and short-term gains are your only goals, this is what you get. Developers produce what they are incentivized to produce, and sadly “snappy” isn’t as high on the list.

I remember a Mastodon thread where this same issue was brought to the table. The thread talked about how there used to be whole classes of bugs that would corrupt RAM. Today, we have eliminated those classes of bugs by checking memory accesses, and that is why our software is less snappy.

I wonder to what extent languages with zero-cost abstractions, like Rust, could make software much snappier by catching memory-related problems at compile time rather than at run time.

We went from a computational tool serving a wide range of tasks to an entertainment widget barely more interactive than a TV, where work is an afterthought.

The former was expected to not get in the way of its users; the latter is designed to retain attention as long as possible to maximize consumption.